Designing "The Bionic Harpist"

Longitudinal participatory research on interface design for professional performance.

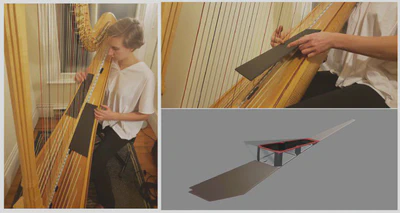

Rehearsing with the Bionic Harp. CIRMMT, Montréal, Canada.

The Bionic Harpist is an ongoing project focused on researching and designing augmented control interfaces for a professional concert harpist. The project has produced three iterations of hardware and software, all of which have been used in performance and remain in use.


Overview

Alexandra Tibbitts performs with the Bionic Harpist controllers in Montreal, QC. 2021.

tl;dr

This project began in 2016 as a design-research collaboration with harpist Alexandra Tibbitts. The goals were twofold:

- To design bespoke interfaces for a concert harp to allow the performer to play live solo electroacoustic music entirely on the harp.

- To conduct a practice-based longitudinal study of participatory design with — and for — professional performers.

Activities

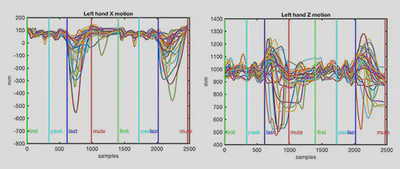

- A motion capture study and analysis of movement and gesture in harp performance.

- Design and implementation of a wireless motion gesture system for augmenting instrumental performance.

- Design and fabrication of hardware interfaces that physically attach to the harp. Two versions have been produced, both used regularly in professional performances.

- Evaluation of participatory co-design methods towards successful adoption and long-term use.

I: Researching harp gesture

The project began with a motion capture study of harp performance. Eight harpists performed excerpts of harp music in a variety of expressive styles while being recorded in a motion capture studio. Analysis of the performances revealed both instrumental (sound-producing) and ancillary (non-sound-producing) gestures. Using this analysis as a guide, we experimented with gestures for processing and modulating sound: sampling and looping, and controlling audio effects.
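The step from a measured movement to an effect parameter can be illustrated with a minimal sketch. The text does not describe the project's actual mappings, so everything below is an assumption: a single hypothetical motion feature (a wrist tilt angle in degrees) is clamped and scaled onto a filter cutoff frequency on a logarithmic scale, and a one-pole (exponential) smoother reduces sensor jitter. The names `scale_clamped`, `OnePoleSmoother` and `tilt_to_cutoff`, and all ranges, are hypothetical.

```python
def scale_clamped(x: float, in_lo: float, in_hi: float,
                  out_lo: float, out_hi: float) -> float:
    """Linearly map x from [in_lo, in_hi] to [out_lo, out_hi], clamping at the ends."""
    t = (x - in_lo) / (in_hi - in_lo)
    t = min(max(t, 0.0), 1.0)  # keep out-of-range gestures from overshooting
    return out_lo + t * (out_hi - out_lo)


class OnePoleSmoother:
    """Exponential (one-pole low-pass) smoothing of a stream of sensor values."""

    def __init__(self, alpha: float, initial: float = 0.0) -> None:
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha  # larger alpha = faster response, less smoothing
        self.value = initial

    def update(self, x: float) -> float:
        # Move a fraction alpha of the way from the current value toward x.
        self.value += self.alpha * (x - self.value)
        return self.value


def tilt_to_cutoff(tilt_deg: float) -> float:
    """Hypothetical mapping: tilt of -45..45 degrees -> cutoff of 200..8000 Hz (log scale)."""
    t = scale_clamped(tilt_deg, -45.0, 45.0, 0.0, 1.0)
    return 200.0 * (8000.0 / 200.0) ** t


if __name__ == "__main__":
    smoother = OnePoleSmoother(alpha=0.2)
    for tilt in (0.0, 15.0, 30.0, 60.0):  # simulated sensor readings
        print(f"{tilt:5.1f} deg -> {tilt_to_cutoff(smoother.update(tilt)):7.1f} Hz")
```

The logarithmic scaling is a common choice for frequency parameters because pitch perception is roughly logarithmic; a linear mapping would crowd most of the audible change into one end of the gesture.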

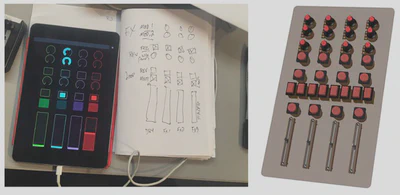

Working with another collaborator, we developed wearable hardware motion controllers and a performance interface that would map the harpist’s movements to standard music software over a wireless network.

The system was put to use in a new performance for solo harp and electronics.

This early work was presented at MOCO, the International Conference on Movement and Computing [1], and ICLI, the International Conference on Live Interfaces [2].

II: Co-designing hardware controllers

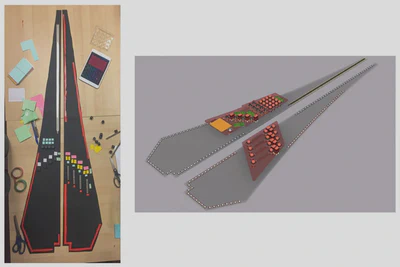

After using the controllers for a period of time, we identified a number of limitations of the system and developed a set of design specifications for a new harp interface:

- Physical augmentation of the harp (rather than open-handed gesture)

- Simple integration into the harpist’s normal performance workflow

- Non-permanent, non-damaging hardware

- Ergonomic, non-invasive design that affords natural, expressive performance

Tibbitts and I co-designed the interfaces using a participatory approach that included ideation and sketching, prototyping at multiple levels of fidelity (from non-functional to functional), CAD design and fabrication, testing, customization, and finally, performance.

Prototyping workflows

Fabrication

Finished hardware and live performance

📽️ First tests of the completed controllers.

Tibbitts has used the controllers in her professional performance and touring setup since their creation, including high-profile appearances at the MUTEK festival in Canada and Mexico, and (remotely) in Japan.

Alex Tibbitts performing as the Bionic Harpist at the MUTEK festival, Montreal, QC.

III: Reflection and iteration

After three years of heavy use, Tibbitts and I began work on an updated version of the controllers for her continuously developing live show. I interviewed Tibbitts to understand her experiences and needs, and to get her perspective on how the project had evolved and where it should go next.

The interviews provided valuable reflection on this type of design research and gave a clear roadmap for a new controller design and build, which was completed in 2022. The new controllers keep the same basic footprint and hardware architecture; however, nearly every component and feature has been rethought and redesigned to provide a more functional and robust interface that can withstand heavy professional use.

Research and creative outcomes

On the creative end, Tibbitts is nearing completion of her first studio album “The Bionic Harpist: Impressions”.

On the research end, I am preparing a submission to the 2024 New Interfaces for Musical Expression (NIME) Conference that reports on our research to date and offers insights on interface design for professional users. This builds on a growing body of research I have carried out on design for professionals and other “extreme” users who place great demands on the technologies they use [3, 4].

[1] Sullivan, J., Tibbitts, A., Gatinet, B., & Wanderley, M. M. (2018). “Gestural Control of Augmented Instrumental Performance: A Case Study of the Concert Harp.” Proceedings of the International Conference on Movement and Computing, Genoa, Italy. https://doi.org/10.1145/3212721.3212814

[2] Tibbitts, A., Sullivan, J., Bogason, Ó., & Gatinet, B. (2018). “A Method for Gestural Control of Augmented Harp Performance (performance).” Proceedings of the International Conference on Live Interfaces, Porto, Portugal.

[3] Sullivan, J., Guastavino, C., & Wanderley, M. M. (2021). “Surveying digital musical instrument use in active practice.” Journal of New Music Research, 50(5), 469–486. https://doi.org/10.1080/09298215.2022.2029912

[4] Sullivan, J., Wanderley, M. M., & Guastavino, C. (2022). “From Fiction to Function: Imagining New Instruments Through Design Workshops.” Computer Music Journal, 46(3). https://doi.org/10.1162/comj_a_00644